How Game High-Protection CDN Defends Against SYN Flood Attacks – Defense Strategies

At 3 AM, game servers were suddenly hit by a SYN Flood attack! North American servers for "Fantasy Expedition" were fully red-alert, players couldn’t log in. Learn about practical defense solutions like SYN Cookie encryption, Linux kernel tuning (tcp_synack_retries=0), near-source traffic scrubbing, IP reputation database integration, and more. Discover how game operations withstood a peak attack

The only sound in the operations center at 3 AM was the hum of the AC unit. Cold air blew across workstations cluttered with empty coffee cups, leaving a thin layer of chill on the desks.

I was dozing off with my face against the keyboard, still feeling the faint glow from the screen, when my phone vibrated wildly in my pocket—my thigh went numb. It was an emergency alert from the monitoring system. The moment the screen lit up, a glaring red pop-up nearly swallowed the entire interface: "North American 'Fantasy Expedition' server cluster abnormal. Login success rate dropped below 20%. Latency exceeded 500ms."

I jerked my head up and looked at the monitoring screen on the opposite wall. My heart sank instantly.

What should have been rows of emerald-green server status indicators now looked like they’d been splashed with boiling red paint. All twelve nodes, from New York to San Francisco, were flashing red. Latency numbers jumped wildly on the screen, and login failure logs scrolled up by dozens per second.

I grabbed the mouse on the desk, my fingers flying across the cold keyboard as I switched to the in-game world channel backend. Thousands of complaints were already flooding in: "What the hell? I spent $2K on this account and can’t log in?" "Server crashed again? Get your act together!" "Five more minutes of this and I’m switching to 'Star Battlefield'—their servers never crash!"

I didn’t need to check the traffic analysis report to know it was another SYN Flood attack.

This thing always strikes during the late-night low-traffic hours, like cockroaches in the kitchen—showing up when you’re most exhausted to ruin your night.

I’d been through this before last year while doing on-site ops at the EdgeCast data center in LA. That time, at 2 AM, a North American e-commerce platform’s servers suddenly went down. I rushed into the server room with my laptop—the operation indicators on the cabinets were glaring red, and even the noise of the cooling fans screamed "overload."

As soon as I started the packet capture tool, the screen flooded with SYN packets from different IPs, nearly 10,000 requests per second. The server’s half-open connection queue filled to its default limit of 1024, and the system logs were full of "tcp syn queue full" errors.

In a rush to fix things, I temporarily expanded the queue capacity to 4096. But within half an hour, the attack traffic doubled, and the queue filled up again. We ended up paying triple to rent a high-defense node, barely surviving the attack.

Getting hit by hackers is routine in the gaming industry, but defending against SYN Flood with high-protection CDN isn’t about brute-forcing with "more configuration."

Last week, I went to Seattle to help a small indie game studio adjust their defense strategy. Their tech director, Mark, dragged me to the server room, pointed at the configuration file on the screen, and said confidently, "I increased tcp_max_syn_backlog from 1024 to 8192. We should be fine now, right?"

I didn’t argue. Instead, I found an idle Alibaba Cloud server and used the hping3 tool to simulate a SYN attack, sending 500 SYN packets per second with spoofed source IPs.

At first, Mark crossed his arms and smiled: "See? Latency only went up by 10ms. No big deal!"

But in less than two minutes, the test server’s CPU usage surged from 15% to 95%, ping times jumped from 12ms to 320ms, and the game login screen stuck on a white "Loading..." page.

Mark stared at the monitoring data in disbelief, unconsciously tapping the desk: "How did this happen? Isn’t the queue big enough?"

I pointed at the connection status graph on the screen: "The queue is bigger, but each half-open connection consumes memory to store IPs, ports, and other connection details. It also ties up CPU resources while waiting for ACK responses."

Once the attack traffic doubled, system resources got eaten up, and legitimate player login requests couldn’t get through—like adding more tables to a restaurant but a crowd occupies them without ordering, leaving no room for actual diners.
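Some back-of-the-envelope arithmetic shows why a bigger queue alone does not help. The sketch below assumes that spoofed SYNs never complete the handshake and that each unanswered entry lingers for about 63 seconds (the default retry schedule discussed later in this post). The function names are illustrative only, not part of any tool we use.

```python
def steady_state_half_open(syn_rate_pps: float, hold_seconds: float) -> float:
    """Entries pending at steady state if no handshake ever completes.

    Little's law: occupancy = arrival rate x time each entry is held.
    """
    return syn_rate_pps * hold_seconds


def seconds_until_full(backlog: int, syn_rate_pps: float) -> float:
    """Time for spoofed SYNs alone to fill an empty queue of this size."""
    return backlog / syn_rate_pps


if __name__ == "__main__":
    rate = 500  # spoofed SYNs per second, as in the hping3 test
    hold = 63   # seconds an unanswered entry lingers (assumed default)
    print("entries wanted at steady state:", steady_state_half_open(rate, hold))
    for backlog in (1024, 8192):
        print(backlog, "slots fill in", seconds_until_full(backlog, rate), "s")
```

Under these assumptions, 500 SYNs per second held for 63 seconds would want 31,500 slots, so even a backlog of 8192 fills within seconds and only delays the problem.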

The defense combo we use now was learned the hard way—costing over $500K when "Star Defense" launched.

The game had just entered open beta, and the user count exploded to two million. On the third day, we were hit with a SYN Flood attack peaking at 2.8 million pps. The servers were down for four hours.

Between compensating players with items, refunds, and the cost of temporarily renting high-defense nodes, we lost over $500K. We also bled 15% of our early players. Just thinking about it still hurts.

That’s when we started developing this "layered defense" strategy.

The first move is the SYN Cookie mechanism, which solves the "half-open connections consuming resources" problem at its root.

Normally, the server allocates connection resources upon receiving a SYN packet, then waits for the client’s ACK. SYN Cookie does the opposite: it does not allocate resources when a SYN arrives. Instead, it uses the client’s IP, port, and a dynamic key only we know to compute a 32-bit cookie value via the SHA-1 hash algorithm (the TCP sequence number field is 32 bits wide, so the hash output is truncated to fit). This value is placed in the sequence number of the SYN-ACK packet sent back.

If the client is legitimate, its ACK packet carries this Cookie value plus one in the acknowledgment number field. When the server receives the ACK, it recalculates using the same algorithm. If the results match, it confirms a legitimate connection and only then allocates resources to establish it.
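The stateless check can be sketched in a few lines. This is a simplified illustration, not our production code and not the Linux kernel's implementation (real SYN cookies also encode details such as the MSS). It assumes an HMAC-SHA1 over the connection 4-tuple, the client's initial sequence number, and a coarse time-slot counter, truncated to the 32 bits that fit in a TCP sequence number. The key, function names, and `max_age` parameter are all hypothetical.

```python
import hashlib
import hmac
import socket
import struct

MASK32 = 0xFFFFFFFF
SECRET = b"example-secret-rotate-me"  # hypothetical key, not from the article


def make_cookie(src_ip, src_port, dst_ip, dst_port, client_isn, counter,
                secret=SECRET):
    """Return a 32-bit value to use as the SYN-ACK sequence number."""
    msg = (
        socket.inet_aton(src_ip)
        + socket.inet_aton(dst_ip)
        + struct.pack("!HHII", src_port, dst_port,
                      client_isn & MASK32, counter & MASK32)
    )
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    return struct.unpack("!I", digest[:4])[0]  # truncate the hash to 32 bits


def ack_is_valid(src_ip, src_port, dst_ip, dst_port, client_isn, ack_number,
                 counter, secret=SECRET, max_age=1):
    """Recompute the cookie for recent time slots; the ACK must equal cookie + 1."""
    for age in range(max_age + 1):
        expected = make_cookie(src_ip, src_port, dst_ip, dst_port,
                               client_isn, counter - age, secret)
        if (expected + 1) & MASK32 == ack_number & MASK32:
            return True
    return False
```

No per-connection state is stored between the SYN and the ACK. Everything needed to validate the ACK is recomputed from the packet itself plus the secret, which is why spoofed SYNs cost only one hash each.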

During a stress test on the Toronto node, we simulated a 100,000 SYN-per-second attack. Before enabling SYN Cookie, server CPU usage hit 99%, memory usage exceeded 70%, and login latency surpassed 300ms. After enabling it, CPU dropped to 40%, memory usage fell to 35%, and latency stabilized around 80ms.

But this method has its limits. When facing ultra-large attacks exceeding 500,000 pps, the cookie calculations themselves become a bottleneck, and CPU usage spikes again. That’s when the second move comes in.

The second move is Linux kernel stack tuning. The key isn’t "expansion" but "speedy release."

We’ve long adjusted tcp_max_syn_backlog to 16384, but what really matters is setting tcp_synack_retries to 0. By default, the server retries sending SYN-ACK 5 times while waiting for an ACK, with intervals increasing from 1 to 32 seconds—waiting up to 63 seconds to release a connection. That’s a pure waste of resources.
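The 63-second figure falls out of simple doubling. The sketch below assumes an initial timeout of 1 second that doubles after every retransmission, as described in the paragraph; actual kernels may differ in details, and the function names are illustrative.

```python
from typing import List


def synack_retransmit_times(retries: int, initial_rto: float = 1.0) -> List[float]:
    """Seconds after the first SYN-ACK at which each retransmission is sent."""
    times, elapsed = [], 0.0
    for i in range(retries):
        elapsed += initial_rto * 2 ** i  # timeout doubles each round
        times.append(elapsed)
    return times


def half_open_lifetime(retries: int, initial_rto: float = 1.0) -> float:
    """Seconds until the server gives up on an unanswered half-open connection."""
    return sum(initial_rto * 2 ** i for i in range(retries + 1))


if __name__ == "__main__":
    for retries in (5, 0):
        print(retries, synack_retransmit_times(retries), half_open_lifetime(retries))
```

With 5 retries the intervals are 1 + 2 + 4 + 8 + 16 + 32 = 63 seconds. With 0 retries only the first 1-second wait remains.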

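After setting it to 0, the server sends a single SYN-ACK and never retransmits it, so an unanswered half-open connection is dropped after the first timeout of about one second. And if an abnormal IP (e.g., from a reputation database blacklist) sends a SYN packet, the server immediately responds with an RST packet to cut off the connection, refusing to play the hacker’s waiting game.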

Additionally, we shortened the timeout for SYN_RECV state from the default 60 seconds to 10 seconds. Even if some half-open connections slip through, they release resources after 10 seconds, tripling the speed of resource release during attacks.
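For reference, here is a small helper that renders the tuning values mentioned so far as a sysctl fragment. The three `net.ipv4.*` keys are standard Linux sysctls. The post does not name the setting used for the SYN_RECV timeout, so `net.netfilter.nf_conntrack_tcp_timeout_syn_recv` (a conntrack sysctl whose default is 60 seconds) is an assumption here, and it only applies when connection tracking is loaded.

```python
from typing import Dict

SETTINGS: Dict[str, int] = {
    "net.ipv4.tcp_syncookies": 1,            # enable SYN cookies (first move)
    "net.ipv4.tcp_max_syn_backlog": 16384,   # larger half-open queue
    "net.ipv4.tcp_synack_retries": 0,        # never retransmit the SYN-ACK
    # Assumption: the article does not say which knob sets the 10 s timeout.
    "net.netfilter.nf_conntrack_tcp_timeout_syn_recv": 10,
}


def render_sysctl(settings: Dict[str, int]) -> str:
    """Render settings in the 'key = value' format read by sysctl."""
    return "\n".join(f"{key} = {value}" for key, value in settings.items()) + "\n"


if __name__ == "__main__":
    print(render_sysctl(SETTINGS), end="")
```

The output could be saved under `/etc/sysctl.d/` and loaded with `sysctl --system`. Values like these should be tested on a staging node first, since a retry count of 0 can also drop legitimate clients on lossy links.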

Last year, during an attack in the Tokyo data center, I used Wireshark to capture packets and saw the hacker’s "SYN Killer" tool sending batches of SYN packets every 3 seconds. Our server instantly responded with RST packets. In less than 10 minutes, the tool popped up an error: "Too many connection rejections. Unable to continue sending."

My colleague Xiao Wang and I stared at the error message on the screen, laughing so hard we almost spilled our instant coffee on the keyboards—that hacker probably had never seen such a "no-nonsense" defense system.

The third move is "near-source deployment + layered filtering" at the traffic scrubbing center. This solves the "successful defense but players still lag" problem.

Last year, when the North American servers of the mobile game "Mech Storm" were attacked, we initially routed all traffic to a scrubbing node in Virginia. As a result, West Coast players’ latency surged from 50ms to 180ms. The game forums were flooded with complaints: "Lagging like a slideshow," "Can’t use skills." Customer service lines were overwhelmed, and some players even posted screenshots of "uninstalling the game."

We quickly adjusted our strategy, deploying edge scrubbing nodes in West Coast cities like Los Angeles and San Francisco with high player density. Attack traffic is filtered locally first, and clean traffic is then transmitted back to the origin server via dedicated lines.

After this adjustment, West Coast players’ latency dropped below 70ms, complaints decreased by 90%, and daily active player churn rate fell from 8% to 1.2%.

The scrubbing strategy has two layers: during low traffic, use "SYN rate threshold" filtering—if a single IP sends more than 20 SYN packets per second, it triggers a CAPTCHA challenge. Hackers’ automated tools can’t recognize CAPTCHAs, so they get blocked. During high-volume attacks (e.g., over a million pps), switch to "behavior analysis mode," using AI models to detect connection behavior—legitimate players send SYN and promptly respond with ACK, while hackers’ attack packets only send SYN without ACK.
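The low-traffic layer's per-IP threshold can be sketched as a sliding-window counter. This is a minimal illustration assuming a one-second window and the 20 SYN/s limit from the text. The class name is hypothetical, and the challenge step itself is left to whatever the scrubbing node provides.

```python
from collections import defaultdict, deque


class SynRateMonitor:
    """Flag source IPs that send more than `max_syn` SYNs within `window` seconds."""

    def __init__(self, max_syn: int = 20, window: float = 1.0):
        self.max_syn = max_syn
        self.window = window
        self._seen = defaultdict(deque)  # src_ip -> timestamps of recent SYNs

    def observe(self, src_ip: str, now: float) -> bool:
        """Record one SYN; return True if this IP should now be challenged."""
        recent = self._seen[src_ip]
        recent.append(now)
        # Drop timestamps that have fallen out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        return len(recent) > self.max_syn
```

A caller would invoke `observe()` once per incoming SYN with a monotonic timestamp and trigger the challenge whenever it returns True.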

If over 80% of connections from an IP range are "SYN-only no-ACK," the system automatically blacklists the entire range and drops its traffic into a "blackhole," saving the resources that handling the addresses one by one would consume.
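Leaving the AI model aside, the 80% rule itself reduces to a ratio per address range. The sketch below assumes ranges are grouped as /24 networks and that a minimum sample count is required before judging a range. Both the prefix length and `min_samples` are assumptions added for illustration, as is the class name.

```python
import ipaddress
from collections import defaultdict


class RangeBehaviorTracker:
    """Track, per network range, how many connection attempts never sent an ACK."""

    def __init__(self, prefix_len: int = 24, syn_only_threshold: float = 0.8,
                 min_samples: int = 50):
        self.prefix_len = prefix_len
        self.syn_only_threshold = syn_only_threshold
        self.min_samples = min_samples  # avoid judging a range on a few packets
        self._total = defaultdict(int)
        self._syn_only = defaultdict(int)

    def record(self, src_ip: str, handshake_completed: bool) -> None:
        net = ipaddress.ip_network(f"{src_ip}/{self.prefix_len}", strict=False)
        self._total[net] += 1
        if not handshake_completed:
            self._syn_only[net] += 1

    def ranges_to_blackhole(self):
        """Return ranges whose SYN-only share exceeds the threshold."""
        return sorted(
            net for net, total in self._total.items()
            if total >= self.min_samples
            and self._syn_only[net] / total > self.syn_only_threshold
        )
```

Requiring a minimum number of samples is a design choice to keep one noisy client from getting a whole range dropped.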

The most easily overlooked move is the fourth—IP reputation database integration. This helps us "prepare for rain before it falls."

We now work closely with three CDN providers: Cloudflare, Akamai, and Alibaba Cloud. They update their global malicious IP databases hourly. Any IP that has launched HTTP Flood, DDoS, or SYN attacks is recorded and synced to our defense system.

Last time, a Brazilian node detected an IP launching an HTTP Flood attack. Within five minutes, that IP was synced to our SYN defense blacklist. Two hours later, that same IP tried to send SYN packets to the North American "Fantasy Expedition" servers—it was blocked after just 3 packets.
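Conceptually, the sync step is a merge of several feeds into one lookup table. The sketch below is not any provider's real API. It assumes each feed has already been downloaded as a plain list of IP strings, and the feed names and function names are hypothetical.

```python
import ipaddress
from typing import Dict, Iterable, Set


def merge_feeds(feeds: Dict[str, Iterable[str]]) -> Dict[str, Set[str]]:
    """Map each malicious IP to the set of feeds that reported it."""
    merged: Dict[str, Set[str]] = {}
    for provider, ips in feeds.items():
        for raw in ips:
            ip = str(ipaddress.ip_address(raw.strip()))  # normalize and validate
            merged.setdefault(ip, set()).add(provider)
    return merged


def is_blocked(src_ip: str, merged: Dict[str, Set[str]]) -> bool:
    """True if any feed has reported this source IP."""
    return str(ipaddress.ip_address(src_ip)) in merged


if __name__ == "__main__":
    table = merge_feeds({
        "feed_a": ["203.0.113.5", "203.0.113.6"],
        "feed_b": [" 203.0.113.5 "],
    })
    print(table["203.0.113.5"], is_blocked("203.0.113.6", table))
```

Keeping track of which feeds reported an IP makes it easier to audit a block later or to require agreement between sources before acting.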

An even more efficient interception: last November, we noticed attack sources heavily using cloud host IPs from AWS’s Oregon region. Checking the reputation database, we found this IP range (about 23,000 addresses) had launched three SYN attacks in European and Asian nodes over the past week. Moreover, player login records for these IPs showed only 0.8% had normal logins in the last 7 days.

We blacklisted the entire range. Immediately, scrubbing node resource usage dropped from 82% to 16%. The ops team saved half a day by not manually filtering malicious IPs.
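The decision to block a whole range can be written down as an explicit rule rather than a gut call. In the sketch below, the thresholds (at least 3 attacks in 7 days, at most 1% of addresses with normal logins) are assumptions loosely modeled on the numbers in this incident, not documented policy. The CIDR in the test is a documentation range, not the real one.

```python
import ipaddress


def should_block_range(attacks_last_7_days: int, legit_login_ratio: float,
                       min_attacks: int = 3,
                       max_legit_ratio: float = 0.01) -> bool:
    """Block only ranges that attack repeatedly and carry almost no real players."""
    return attacks_last_7_days >= min_attacks and legit_login_ratio <= max_legit_ratio


class RangeBlocklist:
    """Hold blocked CIDR ranges and answer membership queries for source IPs."""

    def __init__(self):
        self._networks = []

    def add(self, cidr: str) -> None:
        self._networks.append(ipaddress.ip_network(cidr))

    def contains(self, src_ip: str) -> bool:
        addr = ipaddress.ip_address(src_ip)
        return any(addr in net for net in self._networks)
```

Checking the legitimate-login ratio before blocking is what keeps a range-wide ban from locking out real players who happen to share a cloud or ISP range with attackers.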

At 4:03 AM, the red alerts on the monitoring screen finally faded one by one. Green "Normal" indicators spread from San Francisco to New York.

Traffic analysis reports showed the attack peaked at 3.47 million pps, but player login latency remained stable under 83ms, and login success rate recovered from 17% to 98.6%.

I slumped into my chair, downed my third cup of black coffee, and felt my tense nerves relax slightly as the bitter liquid slid down my throat.

My colleague Xiao Wang walked over with a cup of hot milk and placed it on my desk: "All handled? I thought we’d have to switch to backup servers."

I shook my head, pointing at the defense logs on the screen: "Thankfully, the Cookie mechanism and reputation database held up. Didn’t need the backup nodes."

Xiao Wang smiled and patted my shoulder: "These hackers are persistent, attacking at 3 AM without sleep."

I opened a bookmarked hacker forum page. The "SYN Ghost 3.0" tool I saw last week was still pinned. The poster claimed it could forge Cookie values to bypass detection, and the attached test video showed it successfully breaching a small game’s servers.

"Persistence is one thing, but we can’t let our guard down."

I turned the screen toward Xiao Wang: "Download this tool tomorrow, unpack it, and analyze how it works. Also, update the server’s encryption keys to dynamic daily rotation—every time hackers update their tools, our defenses must level up too."

After all, game security is a never-ending game of cat and mouse. Players don’t care if it’s a 3 million pps SYN Flood or some new attack method—if lag lasts over ten seconds, they might delete the game and switch to a competitor.

Last time, "Fantasy Continent" lost 5% of its daily active players due to a 20-second lag. It took two months of campaigns and nearly a million dollars in rewards to win them back—costing three times more than investing in defense.

The ops department wall has a red slogan: "10 seconds of lag for players is a 100-point crisis for us."

So we have an unwritten rule: no matter what time it is, if the monitoring alarm goes off, ops must be on site within 30 minutes and propose an initial defense plan within 10 minutes. We’d rather build three layers of redundant defense than let players feel even a second of lag.

Dawn was breaking outside. The first rays of sunlight filtered through the blinds of the ops center, casting slender streaks of light on the monitoring screen.

I looked at the steady green curves on the screen, took a sip of hot milk, and knew clearly: this battle was only temporarily over. The next alarm could come in the early hours of tomorrow or the afternoon after. But as long as players look forward to logging into the game, we must hold this red line of defense.

Share this post:

Related Posts

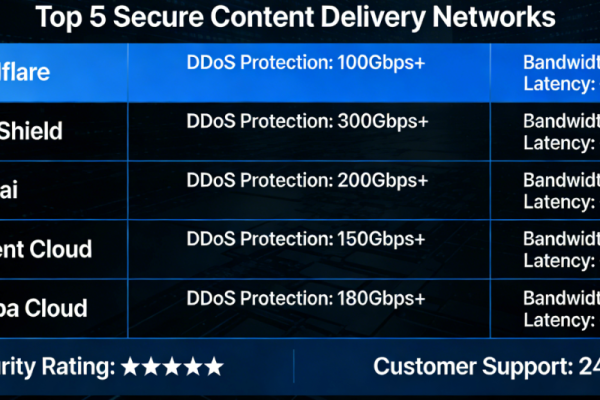

Hong Kong High-Defense CDN Recommendations (2026 Latest Edition)

Not all Hong Kong high-defense CDNs can withstand attacks. This article compares the protection stre...

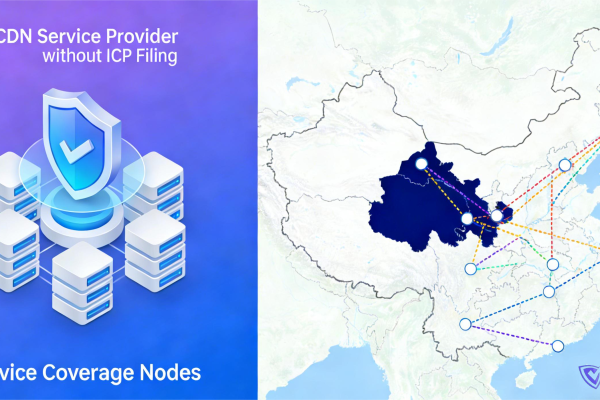

No-ICP CDN Recommendations | Which Ones Actually Speed Up Mainland China AND Can Withstand Attacks?

How to choose a no-ICP CDN? Based on real webmaster tests, this article compares multiple CDN provid...

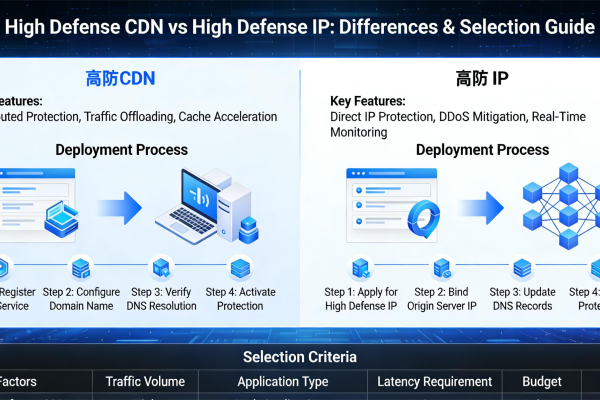

What's the Difference Between DDoS-Protected CDN and DDoS-Protected IP? A Clear Guide to Help You Choose.

What's the difference between a DDoS-protected CDN and a DDoS-protected IP? Which one should your we...